A Practical Framework for Secure AI Adoption

2025-08-01

Generative AI is transforming the way businesses operate: automating workflows, enhancing decision-making, and accelerating productivity.

But as powerful as these tools are, they introduce a new class of risks.

AI systems rely on absorbing vast amounts of data, and in many cases that data includes sensitive company information that users unknowingly expose when prompting tools like ChatGPT.

In a world where employees can draw on a seemingly unlimited number of AI tools, data privacy has become the top concern for businesses considering or implementing AI solutions.

Recent analyses underscore the urgency of this concern, revealing that as much as 8.5% of generative AI (GenAI) prompts include sensitive data.

This sensitive information ranges from customer data to financial records, proprietary code, and even personally identifiable information (PII).

Organizations that lack established policies regulating AI usage, approved tool lists, robust enterprise data protection mechanisms, and comprehensive employee training are highly susceptible to significant and potentially costly data breaches.

The potential for inadvertent data leakage is substantial, making secure AI adoption not merely a technical consideration but a strategic necessity.

Beyond the legal and reputational threats to organizations, AI tools also pose significant privacy risks to individual consumers, who may be unaware of how their information is used and stored.

The Red Pill Labs Framework: 4 Pillars of Secure AI Adoption

Pillar 1: Create a Clear AI Usage Policy

A formal policy regulating AI usage is essential to ensure readiness for AI adoption.

This matters most before formal adoption and integration into business systems, when employees already have access to non-enterprise AI tools, which pose an even greater security risk.

Define Approved Tools: Make it clear which AI tools are allowed, and why.

For example, allow only enterprise-grade accounts to be used for work-related tasks.

Clarify Permissible Data Inputs: Establish data minimization principles that determine what types of data can and cannot be entered into AI tools.

This is especially important for customer data, PII, and financial information.

Set Ethical Guidelines: Go beyond compliance by addressing algorithmic bias, transparency, traceability, and accountability.

For example, ask whether clients should be presented with AI-generated content and, if so, what kind of review is required to ensure its accuracy.

A well-defined and consistently enforced AI usage policy serves as the foundational element for secure AI adoption, guiding employee behavior and establishing clear organizational practices.
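One way to make the approved-tools and permissible-inputs rules enforceable, rather than purely written guidance, is to encode them as a check that runs before a prompt leaves the organization. The sketch below is illustrative only: the tool identifiers in `APPROVED_TOOLS`, the `check_prompt` function, and the regular expressions are hypothetical assumptions, not part of the Red Pill Labs framework, and dedicated data-loss-prevention tooling detects sensitive data far more reliably than a few patterns.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allowlist of tool identifiers the usage policy approves.
APPROVED_TOOLS = {"chatgpt-enterprise", "internal-llm"}

# Illustrative patterns for data categories the policy forbids in prompts.
# These are simplified assumptions, not production-grade PII detection.
BLOCKED_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class PolicyDecision:
    allowed: bool
    reasons: list[str] = field(default_factory=list)
    redacted_prompt: str = ""


def check_prompt(tool: str, prompt: str) -> PolicyDecision:
    """Check a prompt against the (hypothetical) AI usage policy.

    Flags unapproved tools and redacts blocked data categories so the
    user can see exactly what would have been shared.
    """
    reasons = []
    if tool not in APPROVED_TOOLS:
        reasons.append(f"tool '{tool}' is not on the approved list")

    redacted = prompt
    for label, pattern in BLOCKED_PATTERNS.items():
        if pattern.search(redacted):
            reasons.append(f"prompt contains {label}")
            redacted = pattern.sub(f"[{label.upper()} REDACTED]", redacted)

    return PolicyDecision(allowed=not reasons, reasons=reasons,
                          redacted_prompt=redacted)
```

For example, `check_prompt("chatgpt-enterprise", "Email jane.doe@example.com")` is rejected with the reason "prompt contains email" and returns the prompt with the address replaced by `[EMAIL REDACTED]`. Returning reasons instead of silently blocking supports the training goal: employees learn which rule they hit.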

Pillar 2: Fortifying Enterprise Data Protection for AI

Effective enterprise data protection for AI systems requires a deep understanding of how different AI tools handle data, strategic choices regarding deployment models, and the implementation of robust technical safeguards.

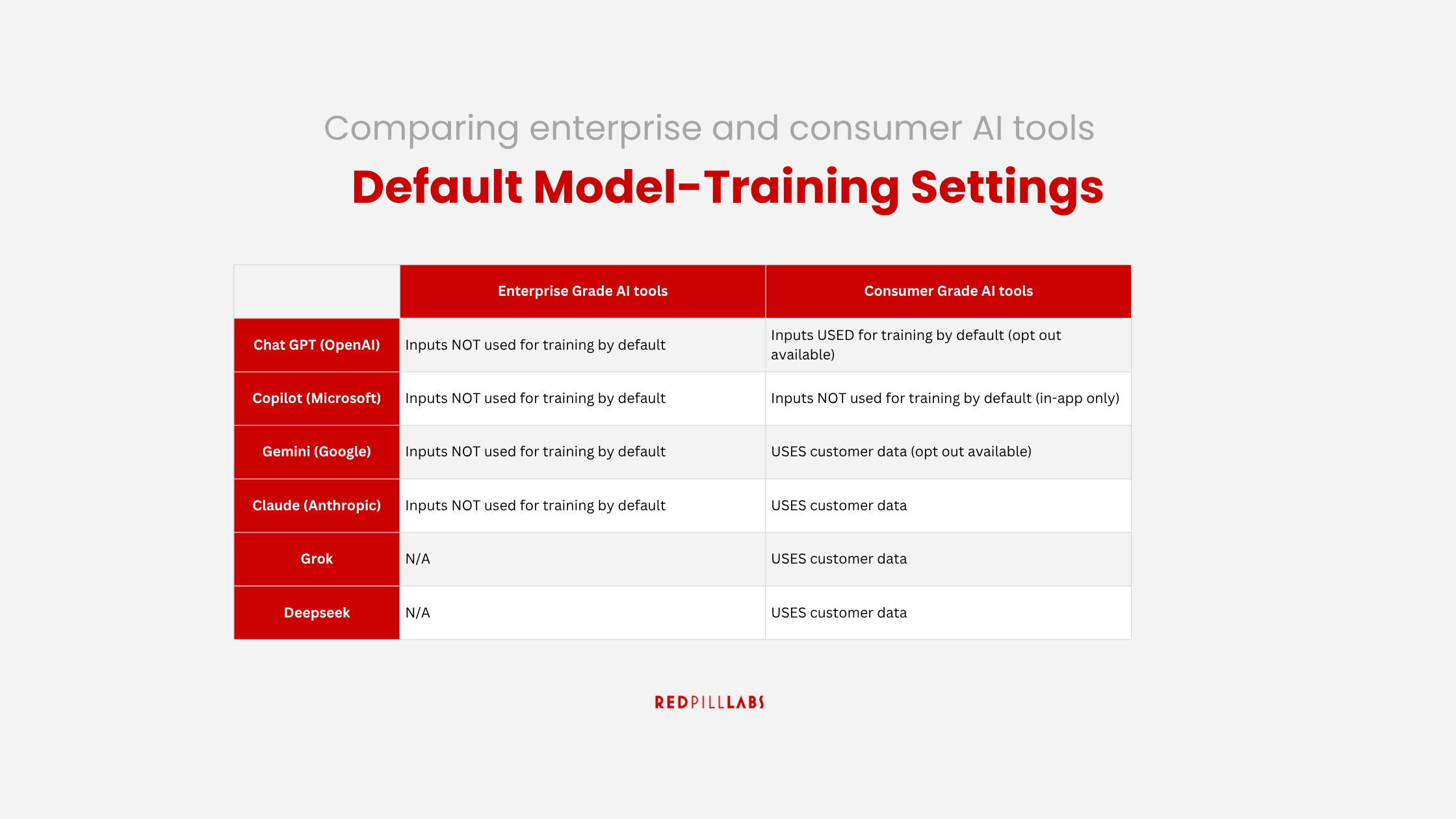

A critical distinction for any organization is how consumer-grade AI tools (e.g., the standard versions of ChatGPT or Gemini) handle user data compared to their enterprise-grade counterparts.

Consumer tools frequently use user inputs for model training by default, whereas enterprise versions are typically configured not to use customer data for training.

This difference is paramount for maintaining data privacy and confidentiality.

For example, regular ChatGPT accounts may use user prompts and data to train models by default, while ChatGPT Enterprise does not use conversations for training by default and gives administrators control over how long data is retained.

Pillar 3: Mitigating "Shadow AI"

"Shadow AI" refers to the unauthorized use of AI tools within an organization, often when employees bypass official channels due to convenience or a lack of approved alternatives.

This phenomenon is not only growing rapidly but also significantly increases the cost of data breaches.

The primary reason for this increased cost is that shadow AI incidents typically take longer to detect and contain.
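Because shadow AI tends to go unnoticed, even a simple scan of web proxy logs can shorten the time to detection. The sketch below assumes a hypothetical log format of whitespace-separated `timestamp user domain` lines; the domain lists and the `find_shadow_ai` function are illustrative assumptions. Note that domain matching alone cannot tell an enterprise account from a consumer account on the same service, so the results are leads for follow-up, not proof of a policy violation.

```python
from collections import Counter

# Illustrative list of public AI tool domains (not exhaustive).
KNOWN_AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com", "claude.ai"}

# Hypothetical sanctioned endpoint, e.g. an internal AI gateway.
APPROVED_AI_DOMAINS = {"llm-gateway.example.internal"}


def _matches(domain: str, domains: set[str]) -> bool:
    """True if domain equals, or is a subdomain of, any listed domain."""
    return any(domain == d or domain.endswith("." + d) for d in domains)


def find_shadow_ai(log_lines: list[str]) -> Counter:
    """Count requests per (user, domain) to AI tools outside the approved list.

    Assumes each line looks like: '<timestamp> <user> <domain>'.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines rather than failing
        _, user, domain = parts[:3]
        domain = domain.lower()
        if _matches(domain, KNOWN_AI_DOMAINS) and not _matches(domain, APPROVED_AI_DOMAINS):
            hits[(user, domain)] += 1
    return hits
```

Aggregating by user and domain, rather than alerting on every request, points the organization toward teams whose needs are not being met by approved tools, which is the secure-enablement response this pillar recommends.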

Despite escalating risks, a concerning number of organizations that experience breaches do not have an AI governance policy in place.

The widespread prevalence of shadow AI highlights a fundamental dynamic: the appeal of AI tools is so strong that employees will often bypass restrictions, sometimes resorting to personal devices and networks, to leverage their perceived productivity benefits.

This can create a dilemma for organizations: outright banning all AI tools risks stifling innovation and hindering employee efficiency.

The prevalence of shadow AI is a direct consequence of a mismatch between employee demand for powerful, accessible tools and the organization's slow or restrictive provision of secure, approved alternatives.

The solution, therefore, is not prohibition but secure enablement.

Organizations must focus on securely integrating GenAI usage into their operations while simultaneously educating employees about the inherent risks of uploading sensitive data, particularly when using free-tier versions.

This approach requires providing approved, secure enterprise-tier tools and comprehensive training, rather than simply imposing bans that are likely to be circumvented.

Pillar 4: A Mission-Critical Change Management Approach

Successful AI adoption, especially when prioritizing security and privacy, is fundamentally a change management challenge.

It requires a people-first approach that cultivates trust, fosters transparency, and builds organizational agility.

AI adoption should not be viewed merely as a technical deployment but rather as a strategic, mission-critical organizational transformation.

Traditional change management strategies must evolve to address the unique concerns and complexities introduced by AI integration.

The success of AI initiatives hinges not just on the technology itself but, more profoundly, on human alignment, effective culture-building, and robust governance, which together allow AI insights to take root and scale across the enterprise.

Making AI Work for Everyone: Build Trust and Buy-In at Work

Building Trust:

Building trust among employees is paramount to mitigating resistance to change.

This involves selecting AI solutions that genuinely prioritize user needs, establishing measurable Key Performance Indicators (KPIs) for AI integration to demonstrate tangible value, providing ample opportunities for AI upskilling, and educating employees on AI ethics and responsible use.

Being Transparent:

Employees need to clearly understand the AI technology they are using to be willing to adapt and integrate it into their work.

This means transparently communicating AI objectives, explaining how job functions will transform, offering comprehensive upskilling resources, and establishing clear mechanisms for employees to challenge AI decisions or report ethical concerns.

Transparent, ongoing communication that directly addresses specific stakeholder concerns is vital for fostering buy-in.

Increasing AI Literacy:

Expanding AI literacy across the entire workforce is crucial for successful and secure AI adoption.

An AI-literate workforce can work effectively in partnership with AI, promoting its responsible use and accelerating value generation for the business.

Key Takeaways:

The adoption of Artificial Intelligence, while offering immense transformative potential, is inherently data-intensive and carries significant risks of sensitive information leakage.

The widespread prevalence of "shadow AI" and numerous instances of unintentional data exposure underscore the urgent need for a structured, proactive, and holistic approach to AI security.

Every organization must understand the fundamental difference in how consumer-grade and enterprise-grade AI tools handle data, a difference that calls for careful tool selection and continuous employee education.

Effective AI security is not merely a technical challenge; it is a comprehensive organizational imperative that demands robust governance frameworks, clear policy enforcement, and sophisticated change management strategies.

Organizations must fundamentally embrace privacy-by-design principles, embedding data protection and ethical considerations from the earliest stages of AI development and deployment.